Multimodal AI

What is Multimodal AI?

- In simple terms, Multimodal AI is artificial intelligence that can process, understand, and generate information across multiple “modes” or types of data.

- Think about how humans experience the world: we see (vision), hear (audio), speak and read (language), and touch (haptics). Our brain seamlessly combines these signals to form a rich, coherent understanding. Multimodal AI aims to replicate this by building models that aren’t limited to just one type of input.

- Unimodal AI: An AI that only works with text (like the original, text-only ChatGPT) or only with images (like an object detector).

- Multimodal AI: An AI that can take both a picture and a text question as input, and then generate a text answer.

The Core Idea: Fusion is Key

- The magic and the main technical challenge of Multimodal AI lie in “fusion”—the process of combining the information from different modalities into a single, unified understanding.

- There are different levels and techniques for fusion:

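- Early Fusion: Combining raw or low-level data from different modalities before any modality-specific processing. (Less common when the modalities are very different, because raw signals such as pixels and audio samples differ in structure and sampling rate and are hard to line up.)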

- Intermediate Fusion: Processing each modality separately at first, then merging the features in the middle layers of a neural network.

- Late Fusion: Processing each modality completely independently and then combining the final results or decisions. (Like averaging the predictions of two separate models).
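
The three fusion levels above can be sketched with toy numbers. This is a minimal illustration, not a real network: the function names, the weights, and the tiny "feature extractors" are all hypothetical stand-ins chosen only to show where the modalities are combined in each case.

```python
# Toy illustration of early, intermediate, and late fusion.
# Every "model" here is a hypothetical stand-in (a weighted sum), not a trained network.

def dot(weights, values):
    """Weighted sum of two equal-length lists."""
    return sum(w * v for w, v in zip(weights, values))

def early_fusion(image_raw, audio_raw, weights):
    # Concatenate the raw inputs first, then run one model over the joint vector.
    joint = image_raw + audio_raw
    return dot(weights, joint)

def intermediate_fusion(image_raw, audio_raw, w_img, w_aud, w_head):
    # Each modality gets its own feature extractor; the features are merged mid-network.
    img_feat = max(0.0, dot(w_img, image_raw))  # ReLU-style feature
    aud_feat = max(0.0, dot(w_aud, audio_raw))
    return dot(w_head, [img_feat, aud_feat])

def late_fusion(image_score, audio_score):
    # Each modality is scored independently; only the final decisions are averaged.
    return (image_score + audio_score) / 2

early = early_fusion([1.0, 2.0], [3.0], weights=[0.5, 0.5, 1.0])
middle = intermediate_fusion([1.0, 2.0], [3.0], w_img=[1.0, -1.0], w_aud=[2.0], w_head=[0.5, 0.5])
late = late_fusion(0.75, 0.25)
```

The only structural difference between the three functions is the point at which image and audio information first touch each other: at the input, at the feature level, or at the decision level.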

Why is Multimodal AI a Big Deal?

- It represents a massive leap towards more general, human-like intelligence for several reasons:

- Richer Understanding: A picture of a cat is one thing. A picture of a cat with the text “This is my grumpy cat, Mittens” provides context and emotion that neither modality has alone.

- Enhanced Robustness and Accuracy: Different modalities can provide complementary information. If an audio model is unsure if a sound was “bare” or “bear,” a vision model confirming the presence of a large animal can resolve the ambiguity.

- Broader Applications: It unlocks a world of possibilities that unimodal systems simply cannot handle, from describing photos for the visually impaired to creating interactive educational tools.

- More Natural Human-Computer Interaction: Our interaction with the world is multimodal. For AI to be a true assistant, it needs to understand our spoken commands while also seeing what we see.

Key Examples and Applications

You’ve likely already interacted with early forms of Multimodal AI:

- GPT-4V (Vision) and Gemini Pro Vision: These models can take an image as input and answer questions about it, identify objects, explain jokes, or even read text from the image.

- Example: You upload a photo of your fridge’s contents and ask, “What can I cook with these ingredients?”

- Self-Driving Cars: The quintessential multimodal system. They fuse data from cameras (vision), LiDAR (3D depth sensing), radar (speed and distance), and GPS (location) to understand the driving environment and make decisions.

- Medical Diagnosis: AI can analyze a medical scan (image), a patient’s medical history (text), and lab results (structured data) to assist a doctor in making a more accurate diagnosis.

- Content Moderation: A platform can use AI to analyze a video by looking at the visual content, the audio for hate speech, and the comments (text) to flag harmful content more effectively than any single system.

- AI-Generated Content (AIGC): Tools like Midjourney and DALL-E are multimodal in reverse: they take a text prompt (one modality) and generate an image (another modality).

Major Challenges

Building these systems is incredibly difficult:

- Alignment: How do you map the concept of a “dog” from an image to the word “dog” in a text embedding? The model must learn a shared, aligned representation space.

- Data Scarcity: While there’s a lot of text data and image data on the internet, high-quality, labeled datasets containing aligned multimodal data (e.g., images with accurate, detailed captions) are harder to find.

- Complexity and Cost: Training models that process multiple high-dimensional data types (like video) requires immense computational power and is very expensive.

- Handling Missing Modalities: What should the model do if one input modality is missing? A robust system should still be able to function, albeit with potentially reduced confidence.
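
One simple way to cope with a missing modality, assuming a late-fusion setup, is to average only the predictions that are actually present and report how much of the expected input was available. The sketch below is an illustration under that assumption; `fuse_available` and the `coverage` value are hypothetical names, and the probabilities are made up.

```python
def fuse_available(predictions):
    """Average class probabilities over the modalities that are present.

    `predictions` maps a modality name to a dict of class probabilities,
    or to None when that modality is missing.
    """
    present = [p for p in predictions.values() if p is not None]
    if not present:
        raise ValueError("at least one modality is required")
    classes = present[0].keys()
    fused = {c: sum(p[c] for p in present) / len(present) for c in classes}
    # Fraction of expected modalities that were available; a caller could
    # use this to lower its confidence in the fused result.
    coverage = len(present) / len(predictions)
    return fused, coverage

# Both modalities present: vision resolves the ambiguous audio.
both, both_cov = fuse_available({
    "audio": {"bear": 0.5, "bare": 0.5},
    "vision": {"bear": 0.9, "bare": 0.1},
})

# Vision missing: the system still answers, but from audio alone.
audio_only, audio_cov = fuse_available({
    "audio": {"bear": 0.5, "bare": 0.5},
    "vision": None,
})
```

Models that fuse earlier usually need a different strategy, such as training with randomly dropped inputs, which this sketch does not cover.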

The Future of Multimodal AI

- The field is moving rapidly, and the future is exciting:

- More Modalities: Beyond text, vision, and audio, future models may incorporate touch (haptics), smell (olfaction), and even physiological data.

- Embodied AI: Multimodal models will be placed in robots, allowing them to interact with the physical world—seeing, hearing, and manipulating objects to perform tasks.

- Seamless Human-AI Collaboration: AI will act as a true partner, understanding complex, multimodal instructions like “Can you edit that video to make the sunset look more dramatic and set it to this song?”

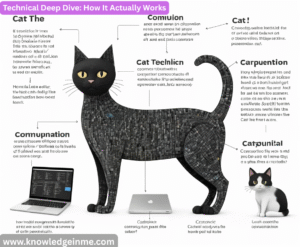

Technical Deep Dive: How It Actually Works

- The core technical problem is representation learning and alignment. How do you get a computer to understand that the pixels of a cat and the word “cat” are related?
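
One widely used way to learn such a relationship is contrastive training, the approach popularized by CLIP: matched image and text embeddings are pulled together in a shared space while mismatched pairs are pushed apart. The sketch below is a simplified, assumed version of that idea (image-to-text direction only, hand-written embeddings, a hypothetical `temperature` of 0.1), not the method of any specific model discussed here.

```python
import math

def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def contrastive_loss(image_embs, text_embs, temperature=0.1):
    """Image-to-text contrastive loss for a batch of matched pairs.

    Pair i is (image_embs[i], text_embs[i]); every other text in the
    batch acts as a negative example for image i.
    """
    total = 0.0
    for i, img in enumerate(image_embs):
        logits = [cosine(img, txt) / temperature for txt in text_embs]
        # Numerically stable log-sum-exp for the softmax denominator.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += -(logits[i] - log_denominator)
    return total / len(image_embs)

images = [[1.0, 0.0], [0.0, 1.0]]      # toy embeddings for "cat photo", "dog photo"
aligned_text = [[1.0, 0.0], [0.0, 1.0]]  # "cat", "dog" in matching order
swapped_text = [[0.0, 1.0], [1.0, 0.0]]  # captions paired with the wrong image

loss_aligned = contrastive_loss(images, aligned_text)
loss_swapped = contrastive_loss(images, swapped_text)
```

The loss is close to zero when each image sits next to its own caption and large when the pairs are swapped, which is the signal that drives the two encoders toward a shared, aligned space.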

The Transformer Backbone:

- Most modern Multimodal AI systems are built on the Transformer architecture, the same technology that powers large language models (LLMs) like GPT. Transformers are excellent at handling sequences and finding relationships between elements, whether those elements are words or patches of an image.

The Encoder-Process-Generate Flow:

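Let’s take a vision-language model in the style of GPT-4V as an example. The exact architecture of GPT-4V has not been published, so the steps below describe a common design rather than its confirmed internals: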

Step 1: Encoding (Turning Modalities into a Common “Language”)

- Text Encoder: The text prompt (“What’s in this image?”) is broken into tokens and converted into a sequence of number vectors (embeddings).

- Vision Encoder: The image is split into patches. Each patch is flattened and linearly projected into a vector. Critically, these image vectors are mapped to the same dimensionality as the text vectors, so that both can be processed by one model. This is the first step towards alignment.

- Other Modalities: Audio can be converted into a spectrogram and then into patches, similar to images.
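
The encoding step can be sketched in plain Python. This is a toy illustration under several assumptions: the weights are random and untrained, the vocabulary is invented, `D_MODEL = 8` is an arbitrary shared width, and the image height and width are assumed to be divisible by the patch size. A real system would use a tensor library and trained parameters.

```python
import random

def patchify(image, patch):
    """Split an H x W grayscale image (a list of rows) into flattened patch x patch blocks."""
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            block = [image[r][c]
                     for r in range(top, top + patch)
                     for c in range(left, left + patch)]
            patches.append(block)
    return patches

def linear_project(vec, weight):
    """Multiply a vector by a (len(vec) x d_model) weight matrix."""
    d_model = len(weight[0])
    return [sum(vec[i] * weight[i][j] for i in range(len(vec))) for j in range(d_model)]

D_MODEL = 8  # hypothetical shared embedding width
rng = random.Random(0)

# Text side: a toy vocabulary and a random (untrained) embedding table.
vocab = {"what": 0, "is": 1, "in": 2, "this": 3, "image": 4, "?": 5}
embedding_table = [[rng.gauss(0, 1) for _ in range(D_MODEL)] for _ in vocab]
text_tokens = "what is in this image ?".split()
text_vectors = [embedding_table[vocab[t]] for t in text_tokens]

# Image side: a 4x4 image cut into four 2x2 patches, each projected to D_MODEL.
image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
patches = patchify(image, patch=2)
w_proj = [[rng.gauss(0, 1) for _ in range(D_MODEL)] for _ in range(4)]
image_vectors = [linear_project(p, w_proj) for p in patches]
```

After this step, `text_vectors` and `image_vectors` are both lists of length-8 vectors, so they can be placed in the same sequence even though they came from different kinds of data.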

Step 2: Fusion and Joint Processing (The “Magic” Layer)

- This is where the modalities meet. The image patch vectors and the text token vectors are concatenated into a single, long sequence.

- This combined sequence is fed into the Transformer model. The self-attention mechanism allows every image patch to “attend to” every word, and vice versa. The model learns cross-modal relationships, such as associating the image patch containing a furry animal with the word “cat” in the question.
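
A stripped-down version of this joint processing is sketched below. It is a simplification under stated assumptions: a single attention head, no learned query/key/value projections (the input vectors are used directly), and hand-written 4-dimensional vectors standing in for real patch and token embeddings.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(seq):
    """Single-head scaled dot-product self-attention with identity Q/K/V (a simplification)."""
    d = len(seq[0])
    outputs, weights = [], []
    for query in seq:
        scores = [sum(a * b for a, b in zip(query, key)) / math.sqrt(d) for key in seq]
        attn = softmax(scores)
        weights.append(attn)
        # Each output is a weighted mix of every vector in the sequence.
        outputs.append([sum(w * v[j] for w, v in zip(attn, seq)) for j in range(d)])
    return outputs, weights

# Hypothetical embeddings: two image patches followed by three text tokens.
image_vectors = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
text_vectors = [[0.9, 0.1, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]

sequence = image_vectors + text_vectors  # one combined sequence of length 5
outputs, weights = self_attention(sequence)

# weights[0][2] is how strongly image patch 0 attends to text token 0.
patch0_to_token0 = weights[0][2]
patch0_to_token1 = weights[0][3]
```

Because the patches and tokens live in one sequence, the attention weights cross the modality boundary freely: here patch 0 attends more to the text token whose vector resembles it than to the unrelated tokens.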

Step 3: Decoding/Generation (Producing the Output)

- Based on this fused, cross-modal understanding, a generative model predicts the answer one token at a time, with each new token conditioned on the image, the prompt, and the tokens generated so far. The output is typically text, but it could be other modalities (like generating an image or speech).
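
The token-by-token generation loop can be sketched as follows. Greedy decoding (always picking the highest-scoring token) is used here as one simple strategy; sampling-based strategies are also common. The `toy_model` function is a hypothetical stand-in for the fused Transformer: it scores the next token from the last token only, whereas a real model would look at the whole image-plus-text sequence.

```python
def greedy_decode(next_token_scores, prompt, max_new_tokens=10, eos="<eos>"):
    """Generate tokens one at a time, always taking the highest-scoring candidate."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)
        best = max(scores, key=scores.get)
        if best == eos:
            break
        tokens.append(best)  # the new token becomes part of the context
    return tokens[len(prompt):]

def toy_model(tokens):
    """Hypothetical scorer: a lookup table keyed on the most recent token."""
    table = {
        "?": {"a": 2.0, "cat": 0.5, "<eos>": 0.1},
        "a": {"a": 0.1, "cat": 3.0, "<eos>": 0.2},
        "cat": {"a": 0.1, "cat": 0.2, "<eos>": 4.0},
    }
    return table[tokens[-1]]

prompt = ["<image>", "what", "is", "this", "?"]
answer = greedy_decode(toy_model, prompt)
```

With this lookup table the loop produces the tokens `a` and `cat`, then stops when the end-of-sequence token scores highest.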