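Generative AI refers to artificial intelligence systems that create new content, such as text, images, audio, video, and code, based on patterns learned from the data they have been trained on. Unlike traditional AI systems that are designed for classification or prediction, generative AI creates original outputs that mimic human-like creativity.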

Key Aspects of Generative AI:

- Foundation Models – Many generative AI systems are built on large-scale neural networks trained on vast datasets, such as:

- Large Language Models (LLMs) (e.g., OpenAI’s GPT-4, Google’s Gemini, Meta’s Llama) for text generation.

- Diffusion Models (e.g., Stable Diffusion, DALL·E, Midjourney) for image generation.

- How It Works – Generative AI learns patterns from training data and uses probabilistic methods to predict and generate new content. For example:

- A text model predicts the next word in a sequence.

- An image model generates pixels based on a text prompt.
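The next-word idea can be sketched with a toy bigram model. It simply counts which word follows which in a tiny made-up corpus and predicts the most frequent follower. Real LLMs replace these counts with a neural network over subword tokens. `train_bigram` and `predict_next` are hypothetical names used only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word follows each other word (a toy stand-in for an LLM)."""
    words = corpus.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


model = train_bigram("the cat sat on the mat the cat ate the fish")
print(predict_next(model, "the"))  # → cat  ("cat" follows "the" twice)
print(predict_next(model, "dog"))  # → None (never seen in the corpus)
```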

Applications:

- Text Generation: Chatbots (ChatGPT), content writing, code generation (GitHub Copilot).

- Image & Video: AI art, deepfakes, game asset creation.

- Audio & Music: AI voice synthesis (ElevenLabs), music composition (Jukebox).

- Healthcare: Drug discovery, synthetic medical data.

- Business: Personalized marketing, automated customer support.

Challenges & Risks:

- Bias & Misinformation: Models can reflect biases in training data or generate false information (“hallucinations”).

- Deepfakes & Misuse: Malicious use for scams, fake news, or impersonation.

- Copyright Issues: Debate over whether AI-generated content infringes on human creators’ rights.

Future Trends:

- Multimodal AI (models that process text, images, and audio together, like GPT-4V).

- Smaller, More Efficient Models (e.g., quantization, LoRA fine-tuning).

- Regulation & Ethics: Governments are developing policies (EU AI Act, U.S. executive orders) to manage AI risks.

1. Core Technologies Behind Generative AI

Generative AI relies on several key architectures and techniques:

Transformer Models for Text & More

- How it works: Uses self-attention mechanisms to process sequences (e.g., words in a sentence).

Examples:

- GPT (Generative Pre-trained Transformer) – Autoregressive (predicts next token).

- BERT (Bidirectional Encoder Representations from Transformers) – Not generative, but foundational for understanding context.

- Use Cases: Chatbots, translation, summarization.
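Below is a minimal single-head sketch of self-attention in plain Python. It omits batching, the multi-head split and the output projection. The identity projection matrices and toy embeddings are illustrative assumptions; in a real Transformer, `w_q`, `w_k` and `w_v` are learned. The optional causal mask shows how a GPT-style autoregressive model is prevented from looking at future tokens.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def self_attention(x, w_q, w_k, w_v, causal=False):
    """Single-head scaled dot-product self-attention over a sequence x (seq_len x d_model)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(q[0])
    out = []
    for i, q_i in enumerate(q):
        scores = []
        for j, k_j in enumerate(k):
            if causal and j > i:
                scores.append(-math.inf)  # GPT-style mask: a token cannot see later tokens
            else:
                scores.append(sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k))
        weights = softmax(scores)  # attention weights for position i sum to 1
        # Output i is the attention-weighted average of all value vectors.
        out.append([sum(w * v_j[c] for w, v_j in zip(weights, v)) for c in range(len(v[0]))])
    return out


identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy 2-d token embeddings
print(self_attention(tokens, identity, identity, identity, causal=True)[0])  # → [1.0, 0.0]
```

With the causal mask, the first token can only attend to itself, so its output is just its own value vector.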

Diffusion Models for Images & Video

How it works:

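- A forward process gradually adds noise to training images. The model learns the reverse process (denoising), so it can start from pure noise and produce a new image step by step.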
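The forward (noising) process has a closed form that lets training jump straight to any noise level. The sketch below makes DDPM-style assumptions: a linear noise schedule from 1e-4 to 0.02 over 1,000 steps, the values used in the original DDPM paper. The reverse process needs a trained neural network and is not shown. The function names are illustrative.

```python
import math
import random


def linear_beta_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Noise variance added at each step, growing linearly (DDPM-style schedule)."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the share of signal left at step t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def add_noise(x0, t, alpha_bar, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = alpha_bar[t]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return x_t, eps  # a denoising network is trained to predict eps from (x_t, t)


alpha_bar = cumulative_alphas(linear_beta_schedule(1000))
rng = random.Random(0)
pixels = [0.5, -0.2, 0.9]  # a toy "image" of three pixel values
slightly_noisy, _ = add_noise(pixels, 10, alpha_bar, rng)  # still close to the original
pure_noise, _ = add_noise(pixels, 999, alpha_bar, rng)     # almost pure Gaussian noise
```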

Examples:

- Stable Diffusion (open-source)

- DALL·E 3 (OpenAI)

- Midjourney (proprietary, high-quality art)

Variational Autoencoders (VAEs) & GANs (Older but Still Relevant)

- GANs (Generative Adversarial Networks):

- Two neural networks (Generator vs. Discriminator) compete to create realistic outputs.

- Used for deepfakes, art, and synthetic data.

- VAEs: Learn a compressed (latent) representation of data and generate new samples by decoding points drawn from that latent space.
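Two pieces of VAE training are easy to show without a neural network:

- **Reparameterization trick:** this makes sampling a latent code differentiable.
- **KL penalty:** this keeps the latent space close to a standard normal distribution, so random draws from it decode to plausible samples.

In this sketch the encoder outputs (`mu`, `log_var`) are hypothetical numbers. The encoder and decoder networks themselves are assumed and not shown.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps; eps carries the randomness so gradients reach mu and log_var."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)) summed over latent dimensions (the VAE regulariser)."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


# Hypothetical encoder output for one input: a 2-d latent Gaussian.
mu, log_var = [0.3, -1.2], [-0.5, 0.1]
z = reparameterize(mu, log_var, random.Random(0))  # latent code fed to the decoder
penalty = kl_to_standard_normal(mu, log_var)       # added to the reconstruction loss
```

After training, new samples come from drawing `z` from a standard normal and passing it through the decoder.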

Multimodal Models (Combining Text, Images, Audio)

Examples:

- GPT-4V (Vision) – Can analyze images and generate text about them.

- Google Gemini – Natively multimodal (text, images, audio).

2. How Generative AI Works (Step by Step)

Training Phase

- Data Collection: Massive datasets (e.g., Wikipedia, books, images, code).

- Preprocessing: Tokenization (for text), normalization (for images).

Model Training:

- Self-supervised learning: The model learns to predict missing parts of the data (e.g., the next word in a sentence).

- Fine-tuning: Adjusts model for specific tasks (e.g., medical Q&A).
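The preprocessing and self-supervised steps can be sketched as follows. The sketch assumes a simple lowercase whitespace tokenizer; production LLMs use subword tokenizers such as byte-pair encoding. Every position in the text becomes a training example whose label is simply the token that follows it. The helper names are hypothetical.

```python
def build_vocab(texts):
    """Assign an integer id to each distinct token (whitespace tokenizer for illustration)."""
    vocab = {}
    for text in texts:
        for token in text.lower().split():
            vocab.setdefault(token, len(vocab))
    return vocab


def encode(text, vocab):
    return [vocab[token] for token in text.lower().split()]


def next_token_examples(ids, context_len):
    """Self-supervised (context, target) pairs: no human labels, the next token is the label."""
    examples = []
    for end in range(1, len(ids)):
        context = ids[max(0, end - context_len):end]
        examples.append((context, ids[end]))
    return examples


corpus = ["The cat sat on the mat"]
vocab = build_vocab(corpus)     # → {'the': 0, 'cat': 1, 'sat': 2, 'on': 3, 'mat': 4}
ids = encode(corpus[0], vocab)  # → [0, 1, 2, 3, 0, 4]
for context, target in next_token_examples(ids, context_len=3):
    print(context, "->", target)
```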

Inference (Generation) Phase

- Text Generation: Text is produced one token at a time using a decoding strategy such as greedy decoding, beam search, or random sampling with temperature control.
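Two of these decoding strategies fit in a few lines, sketched here with made-up logits (model scores) for a three-word vocabulary:

- **Greedy decoding** always picks the top-scoring token.
- **Temperature sampling** divides the logits by a temperature before the softmax and then draws at random.

Beam search, which keeps several candidate sequences alive at once, is not shown. The function names are illustrative.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Lower temperature sharpens the distribution; higher temperature flattens it."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0; use greedy() for deterministic output")
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy(logits):
    """Greedy decoding: always pick the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])


def sample(logits, temperature, rng):
    """Temperature sampling: draw a token id in proportion to its softened probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


vocab = ["cat", "dog", "fish"]
logits = [2.0, 1.0, 0.1]  # made-up model scores for the next token
print(vocab[greedy(logits)])  # → cat
for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 2) for p in softmax_with_temperature(logits, t)])
print(vocab[sample(logits, 1.0, random.Random(42))])
```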

3. Cutting-Edge Applications

Creative Industries

- AI Art: Tools like Midjourney and Stable Diffusion.

Music & Voice:

- Suno AI (generates full songs from text).

- ElevenLabs (hyper-realistic AI voices).

Video Generation:

- Runway ML, Pika Labs – Text-to-video.

- Sora (OpenAI) – High-quality text-to-video generation (released to the public in December 2024 after a limited preview).

Business & Productivity

- Code Generation: GitHub Copilot (originally based on OpenAI’s Codex).

- Marketing: AI-generated ads, personalized content.

- Legal & Finance: Drafting contracts, summarizing reports.

Science & Medicine

- Drug Discovery: AI proposes new candidate molecules, and related models such as AlphaFold predict protein structures.

- Medical Imaging: Synthetic data for training models.

4. Ethical Concerns & Challenges

| Issue | Examples | Possible Solutions |
|---|---|---|
| Bias | Models amplify stereotypes (e.g., gender/racial bias). | Better datasets, fairness audits. |
| Misinformation | Deepfakes, fake news. | Watermarking, detection tools. |
| Copyright | Who owns AI-generated content? | New IP laws, opt-out policies. |
| Job Disruption | Writers, artists, coders affected. | Upskilling, AI-human collaboration. |
| Energy Use | Large models consume massive compute. | Efficient architectures (e.g., Mixture of Experts). |

5. The Future of Generative AI

Key Trends

- Smaller, More Efficient Models:

- LoRA (Low-Rank Adaptation) – Fine-tuning with fewer resources.

- Quantization – Reducing model size for edge devices.

- Agentic AI: AI that can autonomously perform tasks (e.g., AutoGPT).

- Regulation: Governments are stepping in (EU AI Act, U.S. executive orders).
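LoRA’s saving comes from leaving a pretrained weight matrix W frozen and learning only a low-rank update, W + (alpha / r) · B · A. This follows the formulation in the original LoRA paper. Because B starts at zero, the adapted model initially behaves exactly like the base model. The sketch below is plain Python. The 4096 × 4096 matrix size, the rank r = 8 and the alpha values are illustrative assumptions, not settings from any particular model.

```python
import random


def matmul(a, b):
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_init(d_out, d_in, r, rng):
    """A (r x d_in) starts small and random; B (d_out x r) starts at zero, so B @ A = 0 at first."""
    a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
    b = [[0.0] * r for _ in range(d_out)]
    return a, b


def merged_weight(w, a, b, alpha, r):
    """Effective weight W + (alpha / r) * B @ A. W stays frozen; only A and B are trained."""
    delta = matmul(b, a)
    scale = alpha / r
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def lora_trainable_params(d_out, d_in, r):
    return r * (d_in + d_out)


full = 4096 * 4096                             # one 4096 x 4096 projection matrix
lora = lora_trainable_params(4096, 4096, r=8)  # → 65536
print(f"{lora / full:.2%} of the full matrix")  # → 0.39% of the full matrix
```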

Long-Term Possibilities

- Artificial General Intelligence (AGI)? – Some believe generative AI is a step toward AGI.

- Personalized AI Assistants – Fully autonomous agents handling work/life tasks.

- AI in Education – Custom tutors for every student.

6. Training Secrets & Optimization Tricks

Scaling Laws (Chinchilla Paper)

- Optimal model performance depends on dataset size, model size, and compute:

$$\text{Performance} = f(\text{Dataset Size}, \text{Model Size}, \text{Compute})$$

- Key Insight: For a fixed compute budget, model size and training tokens should be scaled in roughly equal proportion; many earlier large models were undertrained for their size.

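- Example: Chinchilla (70B parameters, 1.4T tokens) outperformed the much larger Gopher (280B parameters) trained with the same compute. Llama 2 likewise outperforms some larger models by training on more data (about 2T tokens).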

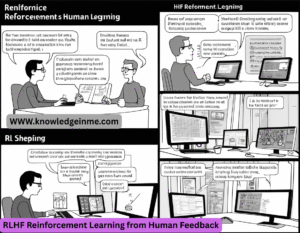
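Here is a rough worked example of the compute-optimal trade-off. It rests on two widely quoted approximations: training compute C ≈ 6 · N · D FLOPs for N parameters and D tokens, and the Chinchilla rule of thumb of roughly 20 tokens per parameter. Both are simplifications, so treat the output as a ballpark figure. The function names are illustrative.

```python
import math

TOKENS_PER_PARAM = 20.0  # rough rule of thumb from Chinchilla, not an exact constant


def training_flops(params, tokens):
    """Common approximation: about 6 FLOPs per parameter per training token."""
    return 6.0 * params * tokens


def compute_optimal_size(flops_budget, tokens_per_param=TOKENS_PER_PARAM):
    """Solve C = 6 * N * (k * N) for model size N, then set tokens D = k * N."""
    n_params = math.sqrt(flops_budget / (6.0 * tokens_per_param))
    return n_params, tokens_per_param * n_params


budget = training_flops(70e9, 1.4e12)  # Chinchilla: 70B params, 1.4T tokens, ~5.9e23 FLOPs
n, d = compute_optimal_size(budget)
print(f"{n / 1e9:.0f}B params, {d / 1e12:.1f}T tokens")  # → 70B params, 1.4T tokens
```

Feeding Chinchilla’s own budget back in recovers its size, which is a quick sanity check on the two approximations.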

RLHF (Reinforcement Learning from Human Feedback)

- Step 1: Supervised fine-tuning on human-written answers.

- Step 2: Train a reward model to rank outputs.

- Step 3: Use PPO (Proximal Policy Optimization) to align the model with human preferences.

- Why it matters: Makes models like ChatGPT less toxic and more helpful.
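Step 2 is commonly trained with a pairwise loss. Humans mark which of two responses they prefer, and the reward model is penalised when it scores the rejected response higher. This is the form used for InstructGPT’s reward model. The sketch below shows only that loss, with made-up scores. The reward network and the PPO step are not shown.

```python
import math


def pairwise_reward_loss(r_chosen, r_rejected):
    """-log(sigmoid(r_chosen - r_rejected)): small when the human-preferred answer scores higher."""
    diff = r_chosen - r_rejected
    # Numerically stable log(1 + exp(-diff)) for large positive or negative diff.
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


def batch_loss(pairs):
    """Mean loss over (reward_for_chosen, reward_for_rejected) pairs scored by the reward model."""
    return sum(pairwise_reward_loss(c, r) for c, r in pairs) / len(pairs)


# Made-up reward-model scores for three comparisons labelled by humans.
print(batch_loss([(2.1, 0.3), (0.5, 1.5), (1.0, 1.0)]))
```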

Mixture of Experts (MoE)

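- Only a subset of the model’s weights is activated for each token: a router picks a few “expert” sub-networks per layer. For example, Mixtral 8x7B has about 47B parameters in total but uses only about 13B per token. GPT-4’s architecture has not been officially disclosed.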

- Saves compute: Enables massive models without proportional cost.
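The routing step can be sketched as top-k gating: keep the k highest-scoring experts, renormalise their gate weights, and run only those experts. Mixtral, for instance, sends each token to 2 of its 8 experts per layer. Here the gate logits are passed in directly, which is an assumption; in a real MoE layer they come from a small learned router applied to each token. The toy experts are simple scaling functions, not feed-forward networks.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits, k):
    """Keep the k highest-scoring experts and renormalise their gate weights to sum to 1."""
    chosen = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, gate_logits, k=2):
    """Run only the selected experts; the output is their gate-weighted sum."""
    out = [0.0] * len(x)
    for idx, weight in top_k_route(gate_logits, k):
        y = experts[idx](x)  # the other experts are never evaluated for this token
        out = [o + weight * y_i for o, y_i in zip(out, y)]
    return out


# Four toy "experts" that just scale their input; real experts are feed-forward networks.
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
gate_logits = [0.1, 2.0, -0.5, 1.5]  # hypothetical router scores for one token
print(top_k_route(gate_logits, k=2))  # experts 1 and 3 are selected
print(moe_forward([1.0, -1.0], experts, gate_logits))
```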

Quantization & Distillation

- Quantization: Store weights in 4-bit instead of 16-bit precision, roughly a 4× memory reduction (e.g., the GPTQ method, the GGUF file format).

- Distillation: Train smaller “student” models to mimic larger “teacher” models (e.g., DistilBERT).
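At its simplest, quantization works like this: scale the weights so the largest magnitude maps to the top of the integer range, round, and keep the scale to undo the mapping later. This sketch uses a single scale per tensor and plain round-to-nearest. Practical formats typically use one scale per small block of weights, and GPTQ additionally adjusts the remaining weights to compensate for rounding error. Treat this as an illustration of the idea, not of either format. Packing two 4-bit values per byte, which is where the memory saving actually comes from, is omitted.

```python
def quantize_symmetric(weights, bits=4):
    """Round-to-nearest 'absmax' quantization with one shared scale per tensor."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit signed integers
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights at inference time."""
    return [v * scale for v in q]


weights = [0.7, -0.3, 0.12, 0.0, -0.56]
q, scale = quantize_symmetric(weights, bits=4)
print(q)                     # small integers in [-7, 7]
print(dequantize(q, scale))  # close to, but not exactly, the original weights
```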

7. Unsolved Problems & Active Research

| Problem | Why It’s Hard | Current Approaches |
|---|---|---|
| Hallucinations | Models optimize for plausibility, not truth. | Retrieval-Augmented Generation (RAG), fact-checking layers. |
| Catastrophic Forgetting | Fine-tuning can overwrite previously learned knowledge. | LoRA, memory-augmented networks. |
| Long Context | Self-attention cost grows quadratically with sequence length, so contexts beyond ~1M tokens are expensive. | Hyena (long-context architectures), Ring Attention. |
| Energy Efficiency | Training GPT-3 was estimated to emit ~550 tonnes of CO₂e; figures for GPT-4 have not been published. | Sparse models, neuromorphic chips. |
| Multimodal Alignment | Text, image, and audio representations don’t fuse perfectly. | Cross-attention layers (e.g., Flamingo). |
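The RAG idea behind the first row works in two steps: retrieve relevant text, then instruct the model to answer from that text rather than from memory alone. The sketch below makes a deliberate simplification: plain word overlap stands in for the embedding similarity and vector index a real system would use. The prompt wording and function names are hypothetical, and the LLM call itself is not shown.

```python
import string


def words(text):
    """Lowercase bag of words with punctuation stripped."""
    return set(text.lower().translate(str.maketrans("", "", string.punctuation)).split())


def retrieve(query, documents, k=1):
    """Rank documents by word overlap with the query (a stand-in for embedding similarity)."""
    q = words(query)
    return sorted(documents, key=lambda d: len(q & words(d)), reverse=True)[:k]


def build_prompt(query, documents, k=1):
    """Ground the model's answer in retrieved text instead of its parametric memory alone."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "The Eiffel Tower is in Paris.",
    "The Colosseum is in Rome.",
    "Mount Fuji is in Japan.",
]
print(build_prompt("Where is the Colosseum?", docs))
```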

8. Speculative Future: 2025–2030

Next-Gen Architectures

- RetNet (Retentive Networks): Could replace Transformers, offering O(1) per-token inference cost with respect to sequence length.
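The O(1) claim comes from RetNet’s recurrent form of “retention”. Each new token updates a fixed-size state, so per-token cost does not grow with sequence length. The result still equals an attention-like sum over all previous tokens. This is a simplified single-head sketch: it omits RetNet’s rotation-based position encoding, its multi-scale decay rates and its normalisation. The decay gamma = 0.9 and the toy vectors are arbitrary assumptions.

```python
def retention_recurrent(qs, ks, vs, gamma):
    """Recurrent form: a fixed-size d_k x d_v state is updated once per token."""
    d_k, d_v = len(ks[0]), len(vs[0])
    state = [[0.0] * d_v for _ in range(d_k)]
    outputs = []
    for q, k, v in zip(qs, ks, vs):
        # S_n = gamma * S_{n-1} + outer(k_n, v_n); cost does not grow with sequence length.
        state = [[gamma * state[i][j] + k[i] * v[j] for j in range(d_v)] for i in range(d_k)]
        # o_n = q_n @ S_n
        outputs.append([sum(q[i] * state[i][j] for i in range(d_k)) for j in range(d_v)])
    return outputs


def retention_parallel(qs, ks, vs, gamma):
    """Equivalent attention-like form: o_n = sum over m <= n of gamma^(n-m) * (q_n . k_m) * v_m."""
    outputs = []
    for n, q in enumerate(qs):
        out = [0.0] * len(vs[0])
        for m in range(n + 1):
            weight = gamma ** (n - m) * sum(a * b for a, b in zip(q, ks[m]))
            out = [o + weight * x for o, x in zip(out, vs[m])]
        outputs.append(out)
    return outputs


qs = [[1.0, 0.5], [0.2, -1.0], [0.3, 0.3]]
ks = [[0.5, 1.0], [1.0, 0.0], [-0.4, 0.2]]
vs = [[1.0, 2.0], [0.0, 1.0], [3.0, -1.0]]
print(retention_recurrent(qs, ks, vs, gamma=0.9))
print(retention_parallel(qs, ks, vs, gamma=0.9))  # same values, up to rounding
```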

AI-Native Hardware

- Optical Neural Networks: Light-based chips for faster inference.

- Neuromorphic Computing: Brain-inspired chips (e.g., Intel Loihi).

Agentic AI

- AutoGPT-style Agents: AI that recursively plans and executes tasks.

- Self-Improving Models: AI that improves by training on data it generates itself, as AlphaGo Zero did through self-play.

Societal Shifts

- Universal Basic Income (UBI): Debates intensify as AI disrupts jobs.

- AI-Generated Everything: Some forecasts suggest that more than 50% of internet content could be synthetic by 2030.

9. DIY: How to Train Your Own Generative AI

For Text (LLMs)

- Dataset: The Pile, Common Crawl.

- Framework: Hugging Face Transformers + PyTorch.

- Training: Use LoRA to fine-tune Llama 3 (8B) on a single GPU.

For Images (Diffusion)

- Dataset: LAION-5B (image–text pairs collected from the public web).

- Framework: Diffusers library + Stable Diffusion.

- Trick: Use DreamBooth for personalized generation (fine-tuning on a few images of a specific subject).

For Music

- Tools: OpenAI’s Jukebox, Meta’s MusicGen.

- Dataset: Free Music Archive (FMA).