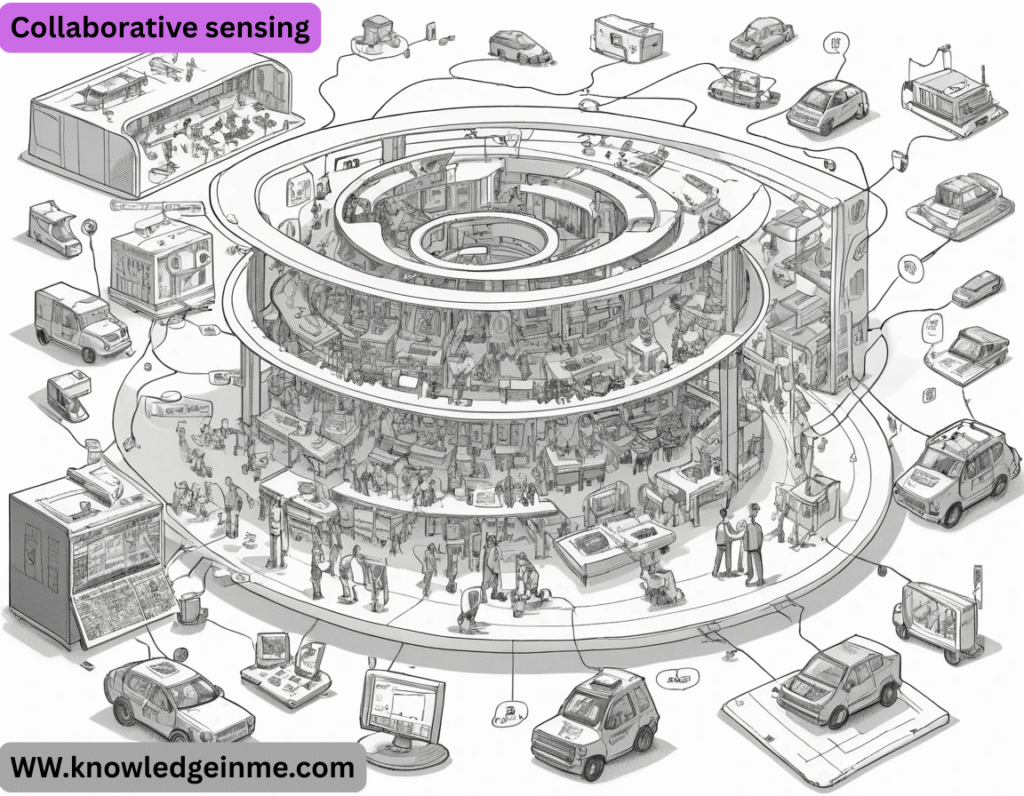

Collaborative Sensing

Collaborative sensing refers to the process in which multiple sensing devices or agents work together to gather, share, and process data to achieve a common objective. This approach improves the accuracy, coverage, and robustness of sensing tasks compared with relying on a single sensor or on isolated systems. Collaborative sensing is widely used in fields such as the Internet of Things (IoT), autonomous vehicles, environmental monitoring, robotics, and smart cities.

Key Aspects of Collaborative Sensing

Multi-Agent Cooperation

- Multiple sensors or devices (e.g., drones, robots, IoT nodes) collaborate to collect and share data.

- Example: A swarm of drones mapping a disaster area together.

Data Fusion & Aggregation

- Combining data from multiple sources improves accuracy and reduces uncertainty.

- Techniques like Kalman filtering, Bayesian inference, or deep learning are used.
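
As a rough illustration of why combining sources reduces uncertainty, the sketch below fuses independent readings of the same quantity by inverse-variance weighting, which is the Bayesian estimate when each reading has Gaussian noise. The function name `fuse_measurements` and the sample values are assumptions made for this example.

```python
def fuse_measurements(readings):
    """Fuse independent noisy readings of one quantity.

    readings: list of (value, variance) pairs with variance > 0.
    Returns (fused_value, fused_variance).
    """
    # Each reading is weighted by the inverse of its noise variance,
    # so more precise sensors contribute more to the result.
    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total_weight
    # The fused variance is smaller than that of any single reading.
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical temperature readings (value in degrees C, noise variance).
readings = [(20.0, 4.0), (22.0, 1.0), (21.0, 4.0)]
value, variance = fuse_measurements(readings)
print(value, variance)
```

The fused variance here is lower than the best single sensor's variance of 1.0, which is the quantitative sense in which fusion "reduces uncertainty". The sketch assumes the sensor errors are independent; correlated errors would need a different weighting.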

Distributed Processing

- Instead of relying on a central server, edge devices process data locally and share insights.

- Reduces latency and bandwidth usage (e.g., federated learning in sensor networks).

Adaptive Sensing

- Sensors dynamically adjust their behavior based on shared information.

- Example: Traffic cameras coordinating to track a vehicle across a city.

Energy Efficiency & Resource Sharing

- Collaborative sensing can optimize power usage by distributing tasks.

- Example: Wireless sensor networks taking turns to transmit data.
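
One simple way to picture sensors "taking turns" is a round-robin transmit schedule, sketched below. The node names and slot count are hypothetical, and real networks typically use more elaborate duty-cycling or clustering protocols.

```python
def round_robin_schedule(node_ids, num_slots):
    """Assign each time slot to exactly one node, cycling through the list."""
    return [node_ids[slot % len(node_ids)] for slot in range(num_slots)]


def duty_cycle(schedule, node_id):
    """Fraction of slots in which the given node has its radio transmitting."""
    return schedule.count(node_id) / len(schedule)


nodes = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical node names
schedule = round_robin_schedule(nodes, num_slots=8)
print(schedule)
print(duty_cycle(schedule, "node-a"))
```

With four nodes sharing the channel, each radio transmits in only a quarter of the slots and can sleep for the rest, which is where the power saving comes from.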

Applications of Collaborative Sensing

- Autonomous Vehicles: Cars sharing real-time road condition data.

- Environmental Monitoring: Distributed sensors tracking air quality or wildlife movements.

- Smart Cities: Traffic lights, cameras, and IoT devices optimizing urban flows.

- Disaster Response: Drones and robots collaborating in search-and-rescue missions.

- Healthcare: Wearable devices sharing patient data for better diagnostics.

Challenges

- Communication Overhead: Ensuring reliable data exchange among sensors.

- Security & Privacy: Preventing malicious actors from manipulating shared data.

- Scalability: Managing large-scale collaborative networks efficiently.

- Heterogeneity: Integrating different types of sensors (e.g., LiDAR, cameras, radar).

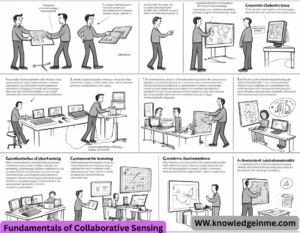

Fundamentals of Collaborative Sensing

1.1 Core Principles

- Spatial & Temporal Coverage: Multiple sensors cover larger areas and longer time spans than a single sensor.

- Redundancy & Fault Tolerance: If one sensor fails, others compensate, improving reliability.

- Diversity of Sensing Modalities: Different sensors (e.g., cameras, LiDAR, radar) provide complementary data.

- Distributed Intelligence: Instead of centralized processing, edge devices collaborate for faster responses.

1.2 Key Components

- Sensing Nodes: Devices (drones, robots, IoT sensors) that collect data.

- Communication Network: Wireless (Wi-Fi, 5G, LoRa) or wired connections for data sharing.

- Fusion Algorithms: Methods to combine data (e.g., Kalman filters, deep learning).

- Control & Coordination: Protocols for task allocation and synchronization.
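
To make the fusion-algorithm component more concrete, here is a minimal scalar Kalman filter that folds in readings from two sensors with different noise levels. It assumes a nearly constant state and hand-picked noise variances; the function name `kalman_step` and all numbers are illustrative only.

```python
def kalman_step(estimate, variance, measurement, meas_variance, process_variance=0.01):
    """One predict-and-update cycle of a scalar Kalman filter."""
    # Predict: the state is modeled as roughly constant, so only the
    # uncertainty grows between measurements.
    variance += process_variance
    # Update: the gain weighs the new measurement against the prediction.
    gain = variance / (variance + meas_variance)
    estimate += gain * (measurement - estimate)
    variance *= (1.0 - gain)
    return estimate, variance


# Hypothetical readings of one temperature: (measurement, sensor noise variance).
readings = [(10.2, 1.0), (9.8, 0.5), (10.1, 1.0), (9.9, 0.5)]
estimate, variance = 0.0, 1e6  # a vague prior: large initial variance
for measurement, meas_variance in readings:
    estimate, variance = kalman_step(estimate, variance, measurement, meas_variance)
print(estimate, variance)
```

The estimate settles near 10 while its variance drops below that of either sensor. A practical filter would use a vector state and a motion model suited to the application.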

Architectures for Collaborative Sensing

Different system designs optimize performance for different application needs:

2.1 Centralized Architecture

- How it works: All sensors send data to a central server for processing.

- Pros: Simple to implement, high computational power.

- Cons: Single point of failure, high latency, bandwidth-intensive.

- Example: Traditional surveillance systems with a central control room.

2.2 Decentralized Architecture

- How it works: Sensors process data locally and share only relevant insights.

- Pros: Lower latency, better scalability, resilient to failures.

- Cons: Complex coordination, potential inconsistencies.

- Example: Autonomous vehicles exchanging V2X (Vehicle-to-Everything) data.

2.3 Hybrid Architecture

- How it works: Combines centralized and decentralized approaches.

- Pros: Balances efficiency and robustness.

- Cons: Requires careful optimization.

- Example: Smart city traffic management where edge devices pre-process data before sending summaries to a cloud server.

2.4 Swarm & Ad-Hoc Networks

- How it works: Self-organizing networks (e.g., drone swarms, mesh sensor networks).

- Pros: Highly scalable, adaptable to dynamic environments.

- Cons: Requires advanced coordination algorithms.

- Example: Search-and-rescue drones forming an ad-hoc network.

3.2 Task Allocation & Coordination

- Auction-Based Methods: Sensors bid for tasks (e.g., which drone covers which area).

- Consensus Algorithms: Ensure all agents agree on a decision (e.g., distributed SLAM).

- Game Theory Approaches: Optimize cooperation vs. competition among agents.
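
The auction idea can be sketched as a sequence of single-item auctions in which each free drone bids its distance to a task and the lowest bid wins. This is a simplified greedy variant, not an optimal assignment method, and the drone and task names are made up for the example.

```python
import math


def auction_allocate(drone_positions, task_positions):
    """Run one auction per task; the closest free drone wins it.

    Both arguments map a name to an (x, y) position.
    Returns a dict mapping task name to the winning drone name.
    """
    assignment = {}
    free_drones = dict(drone_positions)
    for task, task_pos in task_positions.items():
        if not free_drones:
            break  # more tasks than drones: the rest stay unassigned
        # Each free drone bids its travel distance (lower is better).
        bids = {drone: math.dist(pos, task_pos) for drone, pos in free_drones.items()}
        winner = min(bids, key=bids.get)
        assignment[task] = winner
        del free_drones[winner]
    return assignment


drones = {"drone-1": (0.0, 0.0), "drone-2": (10.0, 0.0)}
tasks = {"north-sector": (1.0, 1.0), "east-sector": (9.0, 1.0)}
print(auction_allocate(drones, tasks))
```

Because tasks are auctioned one at a time, the result depends on task order; globally optimal assignment would need something like the Hungarian algorithm instead.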

3.3 Edge Computing & In-Network Processing

- Instead of sending raw data, sensors pre-process and extract features (e.g., detecting anomalies locally).

- Reduces bandwidth and latency (critical for real-time applications).
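
As a minimal sketch of in-network processing, the function below runs on the sensing node and forwards only the samples that look anomalous under a simple z-score test. The threshold of 3 standard deviations and the sample data are assumptions; a deployed system would likely use a model tuned to the signal.

```python
from statistics import mean, stdev


def local_anomalies(samples, threshold=3.0):
    """Return (index, value) pairs lying more than `threshold`
    sample standard deviations from the window mean."""
    mu = mean(samples)
    sigma = stdev(samples)
    if sigma == 0:
        return []  # a perfectly flat window has nothing to report
    return [(i, x) for i, x in enumerate(samples) if abs(x - mu) / sigma > threshold]


# Hypothetical window of 20 readings with one spike at the end.
window = [20.0] * 19 + [50.0]
to_transmit = local_anomalies(window)
print(to_transmit)
print(len(to_transmit), "of", len(window), "samples sent upstream")
```

Only one of the twenty samples leaves the device, which illustrates the bandwidth saving described above.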

Advanced Applications

4.1 Autonomous Vehicles & V2X Networks

- Example: A car shares icy road detection with nearby vehicles via 5G.

4.2 Smart Cities & IoT

- Streetlights, cameras, and air quality sensors work together for urban monitoring.

- Example: Adaptive traffic signals adjusting in real-time based on congestion data.

4.3 Environmental & Disaster Monitoring

- Drones, satellites, and ground sensors track wildfires, floods, or pollution.

- Example: UAV swarms mapping a forest fire and predicting its spread.

4.4 Healthcare & Wearables

- Medical wearables (ECG, SpO2 sensors) share data for early disease detection.

- Example: Smartwatch detecting irregular heartbeats and alerting a hospital.

4.5 Industrial IoT (IIoT) & Robotics

- Factory robots collaborate for precision assembly using shared sensor data.

- Example: AGVs (Automated Guided Vehicles) coordinating in a warehouse.

Challenges & Open Problems

5.1 Technical Challenges

- Scalability: Managing thousands of sensors efficiently.

- Latency & Real-Time Processing: Ensuring fast decision-making in critical applications.

- Heterogeneity: Integrating different sensor types (thermal, LiDAR, RF).

- Energy Efficiency: Prolonging battery life in distributed sensing networks.

5.2 Security & Privacy Risks

- Data Integrity: Preventing false data injection attacks.

- Privacy Leakage: Ensuring sensitive data (e.g., healthcare) isn’t exposed.

- Adversarial Attacks: AI models in collaborative sensing can be fooled by malicious inputs.
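
One common defense against tampering with shared readings is to attach a message authentication code to each one. The sketch below uses HMAC-SHA256 from the Python standard library; it assumes each node already shares a secret key with the receiver, and the message layout and function names are hypothetical.

```python
import hashlib
import hmac
import json


def sign_reading(key: bytes, reading: dict) -> dict:
    """Serialize a reading and attach an HMAC-SHA256 tag."""
    payload = json.dumps(reading, sort_keys=True)
    tag = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_reading(key: bytes, message: dict) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, message["payload"].encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


key = b"example-shared-secret"  # placeholder; real keys need secure provisioning
message = sign_reading(key, {"sensor": "cam-7", "speed_kmh": 42})
print(verify_reading(key, message))   # True

# An attacker who alters the payload without the key cannot forge the tag.
tampered = {"payload": message["payload"].replace("42", "142"), "tag": message["tag"]}
print(verify_reading(key, tampered))  # False
```

This only covers integrity and origin. It does not stop a compromised node from signing false data or an attacker from replaying old messages, which would need additional checks such as timestamps or cross-sensor consistency tests.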

5.3 Standardization & Interoperability

- Lack of universal protocols for cross-platform collaboration.

- Efforts such as the OpenFog Consortium and 5GAA aim to address this.

Theoretical Foundations

1.1 Information Fusion Theory

Collaborative sensing relies on multi-sensor data fusion, which can be categorized into:

- Low-Level (Raw Data Fusion): Direct combination of sensor readings (e.g., LiDAR point clouds + camera pixels).

- Feature-Level Fusion: Extracted features (edges, textures) are merged before processing.

- Decision-Level Fusion: Each sensor makes local decisions, combined later (e.g., voting systems).
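
Decision-level fusion by voting can be sketched in a few lines: each sensor reports its own label and the fused decision is the most common one. The labels below are hypothetical, and the agreement ratio is just one possible confidence measure.

```python
from collections import Counter


def majority_vote(decisions):
    """Return the most common local decision and the share of sensors backing it."""
    counts = Counter(decisions)
    label, votes = counts.most_common(1)[0]
    return label, votes / len(decisions)


# Local classifications from a camera, a LiDAR unit, and a radar unit.
local_decisions = ["pedestrian", "pedestrian", "cyclist"]
label, agreement = majority_vote(local_decisions)
print(label, agreement)
```

Ties are resolved in favor of whichever label was reported first, so a real system might weight votes by sensor reliability instead.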

Key Mathematical Frameworks:

- Random Finite Sets (RFS): Used in multi-target tracking (e.g., tracking pedestrians with overlapping sensors).

1.2 Graph-Based Collaborative Sensing

- Graph Neural Networks (GNNs) enable decentralized inference (e.g., traffic prediction in smart cities).

- Consensus Algorithms ensure sensors agree on global states (e.g., distributed Kalman filtering).
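
The way consensus lets sensors agree on a global state can be illustrated with average consensus: every node repeatedly nudges its value toward its neighbors' values, and on a connected undirected graph all nodes converge to the network-wide mean. The three-node topology, the step size, and the function names are assumptions for this sketch.

```python
def consensus_step(values, neighbors, step_size):
    """One synchronous round: each node moves toward its neighbors' values."""
    return {
        node: x + step_size * sum(values[n] - x for n in neighbors[node])
        for node, x in values.items()
    }


def run_consensus(values, neighbors, step_size=0.3, iterations=50):
    """Repeat the local update; step_size should stay below 1 / (max node degree)."""
    for _ in range(iterations):
        values = consensus_step(values, neighbors, step_size)
    return values


# Hypothetical line topology a - b - c, with each node's local estimate.
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
initial = {"a": 18.0, "b": 21.0, "c": 24.0}
final = run_consensus(initial, neighbors)
print(final)
```

No node ever sees the full set of readings, yet each ends up holding approximately the global average of 21. Distributed Kalman filtering builds on the same exchange pattern with richer state.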

1.3 Information-Driven Sensor Control

- Sensor Selection Problem: Choosing which sensors to activate to maximize information gain while minimizing energy.

- Solved via Reinforcement Learning (RL) or Submodular Optimization.
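
For the submodular route, a standard greedy heuristic repeatedly activates the sensor with the largest marginal gain. In the sketch below, "information gain" is approximated by the number of newly covered grid cells and the energy limit by a cap on active sensors; both simplifications, and the sensor names, are assumptions.

```python
def greedy_select(coverage, budget):
    """Pick up to `budget` sensors, each time taking the largest marginal coverage.

    coverage: dict mapping sensor name to the set of cells it observes.
    Returns (selected_sensors, covered_cells).
    """
    selected, covered = [], set()
    for _ in range(budget):
        candidates = [s for s in coverage if s not in selected]
        if not candidates:
            break
        best = max(candidates, key=lambda s: len(coverage[s] - covered))
        if not coverage[best] - covered:
            break  # no remaining sensor adds anything new
        selected.append(best)
        covered |= coverage[best]
    return selected, covered


coverage = {
    "s1": {1, 2, 3, 4},
    "s2": {3, 4, 5},
    "s3": {5, 6},
    "s4": {1, 2},
}
selected, covered = greedy_select(coverage, budget=2)
print(selected, covered)
```

Coverage is a monotone submodular function, so this greedy choice is guaranteed to reach at least a (1 - 1/e) fraction of the best achievable coverage for the same budget.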

Advanced Algorithms (2023–2025 Research)

2.1 Federated Learning for Collaborative Sensing

- Problem: Train AI models without sharing raw data (privacy-preserving).

New Approaches:

- Split Learning: Each sensor runs only the first layers of a neural network and sends the intermediate activations to a server that runs the rest, reducing on-device computation.

- Federated Reinforcement Learning (FRL): Multiple agents learn policies collaboratively (e.g., UAV swarms).
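
The basic federated pattern behind these approaches can be sketched with federated averaging on a toy linear model: each node trains on its private data, and only the resulting weights are averaged centrally. The model, learning rate, datasets, and function names are all assumptions chosen to keep the example small.

```python
def local_update(weights, data, lr=0.1, epochs=20):
    """Gradient descent for y = w*x + b on one node's private (x, y) pairs."""
    w, b = weights
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates, sizes):
    """Average the node weights, weighted by each node's dataset size."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


def train(node_datasets, rounds=50):
    """Run several rounds; raw data never leaves the nodes, only weights do."""
    global_weights = (0.0, 0.0)
    sizes = [len(d) for d in node_datasets]
    for _ in range(rounds):
        updates = [local_update(global_weights, d) for d in node_datasets]
        global_weights = federated_average(updates, sizes)
    return global_weights


# Two hypothetical nodes observing different ranges of the same relation y = 2x + 1.
node_a = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)]
node_b = [(1.5, 4.0), (2.0, 5.0)]
w, b = train([node_a, node_b])
print(w, b)
```

The shared model approaches w = 2 and b = 1 even though neither node sees the other's samples. Real deployments add secure aggregation, client sampling, and handling for nodes with very different data.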

2.2 Neuromorphic Collaborative Sensing

- Bio-Inspired Sensors: Event cameras + spiking neural networks (SNNs) for ultra-low-power sensing.

- Applications: High-speed robotics (e.g., drones avoiding dynamic obstacles).

2.3 Quantum Collaborative Sensing

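- Quantum Radar: Multiple quantum-entangled sensors could, in principle, achieve resolution beyond what independent classical sensors allow; practical systems remain experimental.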

- Quantum Key Distribution (QKD): Secure communication for military/medical sensing.

2.4 Self-Supervised Collaborative Learning

- Sensors learn representations from unlabeled data (e.g., contrastive learning for weather prediction).

- Example: Cameras + LiDAR learning cross-modal features without manual annotation.

Hardware Innovations

3.1 Reconfigurable Intelligent Surfaces (RIS)

- Smart Surfaces (e.g., walls, mirrors) act as passive sensors/relays, improving coverage.

- 6G Integration: RIS enhances mmWave/THz communication for collaborative sensing.

3.3 Soft & Swarm Robotics

- Soft Sensors: Stretchable electronics for wearable collaborative sensing.

- Robot Swarms: Kilobots and DNA-based nanorobots for environmental monitoring.

Next-Gen Applications

4.1 Collaborative Sensing in the Metaverse

- Digital Twins: Real-time sensor networks mirroring physical worlds (e.g., factory digital twin).

- Haptic Feedback Collaboration: Wearables sharing touch data for VR/AR interactions.