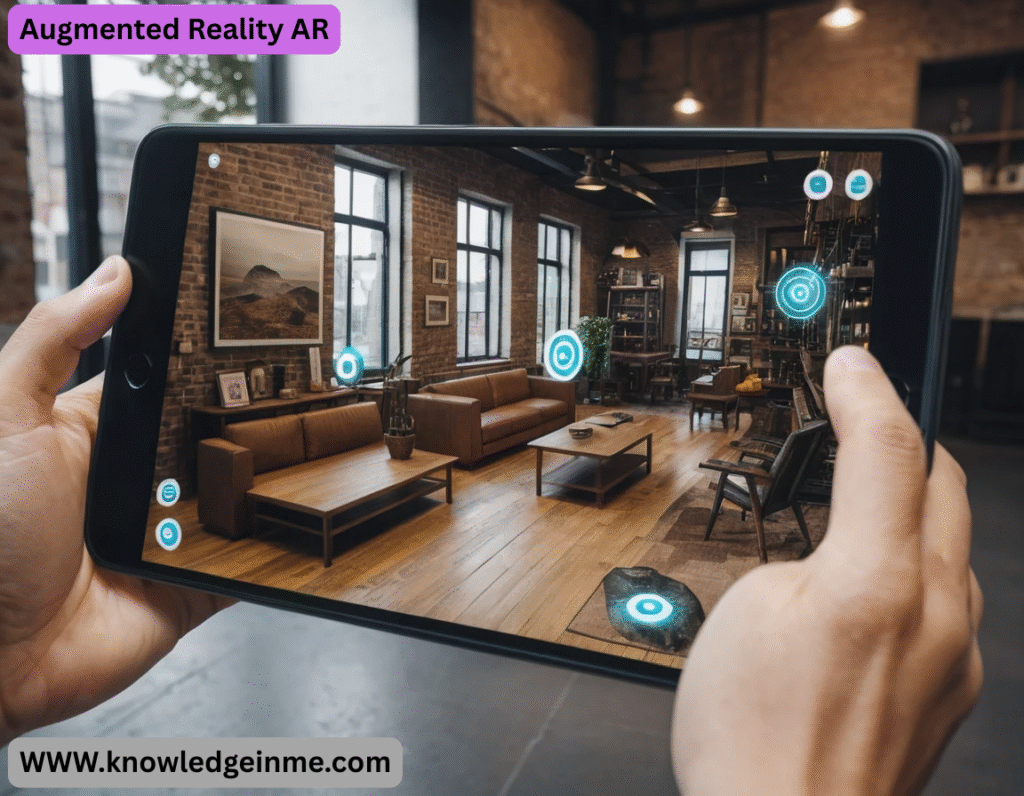

Augmented Reality (AR)

Unlike Virtual Reality (VR), which creates a fully immersive digital environment, AR blends virtual elements with the real world in real time.

Key Features of AR:

- Real-Time Interaction: AR integrates digital content with the physical world instantly.

- Environment Recognition: Uses cameras, sensors, and algorithms to detect surfaces, objects, and lighting.

- Device Compatibility: Works on smartphones, tablets, smart glasses (e.g., Microsoft HoloLens, Magic Leap), and AR headsets.

- User Engagement: Enhances experiences in gaming, education, retail, and more.

How AR Works:

- Sensing: Cameras and sensors scan the environment.

- Processing: Software (e.g., ARKit for iOS, ARCore for Android) interprets the data.

- Projection: Digital content is overlaid onto the real world via a display.
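The projection step can be illustrated with a pinhole camera model, which maps a 3D point in front of the camera to a pixel on the display. This is a minimal sketch, not how any particular AR SDK implements it; the `Camera` class, the `project_point` function, and the intrinsic values are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    """Hypothetical pinhole intrinsics, all in pixels."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x (usually near the image centre)
    cy: float  # principal point y


def project_point(cam, point_cam):
    """Project a 3D point given in camera coordinates (metres) to pixels.

    Assumed convention: x right, y down, z forward.
    Returns None when the point is behind the camera.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    u = cam.fx * x / z + cam.cx
    v = cam.fy * y / z + cam.cy
    return (u, v)


cam = Camera(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
print(project_point(cam, (0.0, 0.0, 2.0)))    # (640.0, 360.0)
print(project_point(cam, (0.5, -0.25, 2.0)))  # (890.0, 235.0)
```

A point straight ahead lands on the principal point, and the same offset appears smaller on screen as depth grows, which is what keeps an overlay visually attached to its real-world location.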

Applications of AR:

- Gaming: Pokémon GO, Harry Potter: Wizards Unite

- Retail: Virtual try-ons (IKEA Place, Sephora Virtual Artist)

- Education: Interactive 3D models for learning (Google Expeditions)

- Healthcare: Surgical navigation, medical training

- Navigation: AR directions (Google Maps Live View)

- Marketing & Advertising: Interactive ads and product demos

- Industrial Use: Maintenance assistance, remote collaboration

Challenges in AR:

- Hardware Limitations: Battery life, processing power, and display quality.

- Privacy Concerns: Cameras and sensors may collect sensitive data.

- User Adoption: Requires widespread acceptance of AR wearables.

Types of AR

AR can be categorized based on how digital content is integrated into the real world:

Marker-Based AR (Image Recognition)

- How it works: Uses predefined markers (QR codes, images) to trigger digital overlays.

- Example: Scanning a QR code to view a 3D model.
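As a rough sketch of the idea, a marker-based app can map a decoded marker payload to an overlay and use the marker's known physical size to estimate how far away it is. The registry contents, function names, and numbers below are hypothetical; detecting and decoding the marker in the camera image is assumed to happen elsewhere.

```python
# Hypothetical registry: decoded marker payload -> 3D asset to display.
MARKER_OVERLAYS = {
    "chair-demo": "chair_model.glb",
    "engine-demo": "engine_model.glb",
}


def overlay_for(payload):
    """Return the asset registered for a marker payload, or None if unknown."""
    return MARKER_OVERLAYS.get(payload)


def marker_distance_m(real_width_m, pixel_width, focal_length_px):
    """Estimate camera-to-marker distance using similar triangles.

    Assumes a pinhole camera and a marker roughly facing the camera.
    """
    if pixel_width <= 0:
        raise ValueError("pixel_width must be positive")
    return focal_length_px * real_width_m / pixel_width


# A 10 cm marker that appears 200 px wide with a 1000 px focal length.
print(overlay_for("chair-demo"))               # chair_model.glb
print(marker_distance_m(0.10, 200.0, 1000.0))  # 0.5
```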

Markerless AR (Location-Based or SLAM)

- How it works: Uses GPS, accelerometers, and Simultaneous Localization and Mapping (SLAM) to place digital objects in the real world without markers.

- Example: Pokémon GO (location-based), furniture placement apps like IKEA Place.
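For the location-based case, one common approach is to compute the distance and compass bearing from the user's GPS fix to a point of interest, then show the overlay only when that bearing falls inside the camera's field of view. The sketch below uses the haversine formula on a spherical Earth; the function names and the 60 degree field of view are assumptions for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Return (distance in metres, initial bearing in degrees from north)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing


def is_in_view(bearing_deg, heading_deg, fov_deg=60.0):
    """True when the target bearing lies within the horizontal field of view."""
    diff = (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0  # signed, in [-180, 180)
    return abs(diff) <= fov_deg / 2


dist, bearing = distance_and_bearing(0.0, 0.0, 0.0, 1.0)  # target due east
print(round(bearing, 1), is_in_view(bearing, heading_deg=80.0))
```

GPS alone is typically accurate only to a few metres, which is why apps that need precise placement on surfaces rely on SLAM rather than location data.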

Projection-Based AR

- How it works: Projects light onto physical surfaces to create interactive displays (often loosely described as holograms).

- Example: Industrial AR for machinery repair instructions.

Superimposition-Based AR

- How it works: Replaces or enhances real-world objects with digital versions.

- Example: Medical AR for overlaying X-ray data on a patient.

Outlining AR (Contour-Based)

- How it works: Uses object recognition to highlight boundaries (e.g., parking lines, facial contours).

- Example: BMW’s AR windshield for lane detection.
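Once an object has been recognized and segmented, highlighting its boundary can be as simple as finding the object pixels that touch the background. The sketch below works on a small binary mask and is only an illustration; the `outline` function is hypothetical, and production systems would run on full camera frames with a computer-vision library.

```python
def outline(mask):
    """Return the set of (row, col) cells on the boundary of the object.

    `mask` is a list of rows of 0/1 values, where 1 marks the object.
    A cell is on the boundary if any 4-neighbour is background or off-grid.
    """
    rows, cols = len(mask), len(mask[0])
    boundary = set()
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                off_grid = not (0 <= nr < rows and 0 <= nc < cols)
                if off_grid or not mask[nr][nc]:
                    boundary.add((r, c))
                    break
    return boundary


mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(len(outline(mask)))  # 8: every object cell except the centre
```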

Core Technologies Behind AR

AR relies on multiple technologies working together:

Hardware Components

- Cameras & Sensors: Depth sensors (LiDAR), RGB cameras, infrared.

- Processors: CPUs, GPUs, and AI accelerators (e.g., Apple’s Neural Engine) for real-time rendering and on-device inference.

- Displays: Smartphone screens, AR glasses (e.g., Microsoft HoloLens), or projectors.

- Tracking Systems: IMUs (Inertial Measurement Units), eye tracking.
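To show how IMU data can feed tracking, here is a minimal sketch of a complementary filter, a simple and widely used way to fuse gyroscope and accelerometer readings into a stable tilt angle. The axis convention, the `alpha` weight, and the sample rate are assumptions; real AR devices generally use more elaborate sensor fusion combined with camera data.

```python
import math


def complementary_filter(angle_deg, gyro_rate_dps, accel_y, accel_z, dt, alpha=0.98):
    """Update a tilt estimate (degrees) from one IMU sample.

    The gyroscope is smooth but drifts over time; the accelerometer is noisy
    but drift-free. `alpha` weights the integrated gyro angle against the
    accelerometer's gravity-based angle.
    """
    gyro_angle = angle_deg + gyro_rate_dps * dt
    accel_angle = math.degrees(math.atan2(accel_y, accel_z))
    return alpha * gyro_angle + (1.0 - alpha) * accel_angle


# Stationary device tilted 45 degrees: gyro reads zero, gravity splits evenly.
angle = 0.0
for _ in range(500):
    angle = complementary_filter(angle, 0.0, accel_y=0.7071, accel_z=0.7071, dt=0.01)
print(round(angle, 1))  # converges toward the accelerometer's 45 degree estimate
```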

Software & Algorithms

- Computer Vision: Detects surfaces, objects, and lighting.

- SLAM (Simultaneous Localization and Mapping): Maps environments in real time.

- 3D Rendering Engines: Unity, Unreal Engine for AR content.

Development Platforms

- ARKit (Apple) – iOS AR development.

- ARCore (Google) – Android AR development.

- Vuforia – Marker-based AR SDK.

- OpenXR – Cross-platform AR/VR standard.

Industry-Specific Use Cases

Healthcare

- Surgical AR: Surgeons see real-time patient data during operations (AccuVein for vein visualization).

- Medical Training: 3D anatomy models for students.

Retail & E-Commerce

- Virtual Try-Ons: Sephora’s Virtual Artist, Warby Parker glasses try-on.

- AR Shopping: Amazon’s Room Decorator for furniture.

Manufacturing & Maintenance

- Remote Assistance: Technicians get AR-guided repairs (Scope AR).

- Assembly Line AR: Workers see step-by-step instructions.

Education & Training

- Interactive Learning: AR textbooks with 3D models.

- Military Training: Simulated battlefield scenarios.

Automotive

- AR Dashboards: Navigation arrows on windshields (HUDs).

- Self-Driving Cars: Enhanced object recognition.

Social Media & Entertainment

- AR Filters: Snapchat, Instagram face filters.

- Live Concerts: Virtual artists performing in AR (Travis Scott in Fortnite).

Future Trends in AR

AR Glasses Going Mainstream

- Apple Vision Pro, Meta Quest 3, and Ray-Ban Meta smart glasses.

- Lightweight, stylish designs replacing bulky headsets.

AI + AR Integration

- Generative AI creating dynamic AR content (e.g., ChatGPT-powered AR assistants).

- Object recognition improving with AI (e.g., real-time translation of signs).

WebAR (Browser-Based AR)

- No app needed: AR experiences run directly in web browsers (8th Wall, WebXR).

- Brands using WebAR for marketing (e.g., Pepsi’s AR bus shelter ad).

AR Cloud (Persistent AR)

- Digital objects remain in place for multiple users.
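Conceptually, persistence means anchors are stored in a shared map so that every user who queries the same area sees the same content in the same place. The sketch below models that with an in-memory store; `Anchor` and `AnchorStore` are hypothetical names, and a real AR cloud would also have to align each device to the shared coordinate frame.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Anchor:
    anchor_id: str
    position: tuple  # (x, y, z) in metres, in an assumed shared map frame
    content: str     # identifier of the digital object to show


class AnchorStore:
    """In-memory stand-in for a shared, persistent anchor service."""

    def __init__(self):
        self._anchors = {}

    def publish(self, anchor):
        self._anchors[anchor.anchor_id] = anchor

    def nearby(self, position, radius_m):
        """Return anchors within radius_m of the given position."""
        return [a for a in self._anchors.values()
                if math.dist(a.position, position) <= radius_m]


store = AnchorStore()
store.publish(Anchor("statue-1", (2.0, 0.0, 5.0), "virtual_statue"))

# Two users standing at different spots resolve the same anchor.
user_a = store.nearby((0.0, 0.0, 0.0), radius_m=10.0)
user_b = store.nearby((4.0, 0.0, 4.0), radius_m=10.0)
print(user_a == user_b)  # True
```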

5G & Edge Computing

- Faster data speeds enable real-time cloud AR processing.

- Reduced latency for multiplayer AR games.
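The latency argument can be made concrete with a simple budget: the time from head motion to updated pixels is roughly the sum of capture, network round trip, rendering, and display time. All figures below are illustrative assumptions, not measurements, and the 20 ms target is an often-cited comfort threshold used here only as an example.

```python
def motion_to_photon_ms(capture_ms, network_rtt_ms, render_ms, display_ms):
    """Sum the stages of a cloud-rendered AR frame (simplified, no overlap)."""
    return capture_ms + network_rtt_ms + render_ms + display_ms


def fits_budget(total_ms, budget_ms=20.0):
    return total_ms <= budget_ms


# Same pipeline, different network round-trip times (illustrative values).
distant_cloud = motion_to_photon_ms(4.0, 60.0, 6.0, 5.0)
nearby_edge = motion_to_photon_ms(4.0, 4.0, 6.0, 5.0)

print(distant_cloud, fits_budget(distant_cloud))  # 75.0 False
print(nearby_edge, fits_budget(nearby_edge))      # 19.0 True
```

In this simplified model the network round trip dominates, which is why moving computation to a nearby edge node matters more than raw bandwidth.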

Challenges & Limitations

| Challenge | Description |
| --- | --- |
| Hardware Constraints | AR glasses need better battery life, field of view, and comfort. |
| Privacy Concerns | Cameras in AR devices raise surveillance issues. |
| Content Ecosystem | Lack of killer apps beyond gaming/filters. |
| Cost | High-end AR devices (e.g., HoloLens 2 ~$3,500) are expensive. |
| Standardization | Fragmented platforms (ARKit vs. ARCore vs. OpenXR). |

Neuroscience & AR: Rewiring Human Perception

Cognitive Load & AR

- Studies show AR can reduce mental effort in complex tasks (e.g., assembly instructions) but may overload users with poorly designed interfaces.

- “Inattentional Blindness” risk: Users focusing on AR overlays might miss real-world hazards.

Spatial Memory Alteration

- AR navigation (e.g., Google Maps AR) may weaken innate wayfinding skills by outsourcing spatial cognition to tech.

Neuroadaptive AR

- Future systems could use EEG or fNIRS to monitor brain activity and adjust AR content in real time (e.g., simplifying data if stress is detected).
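A neuroadaptive loop of this kind could be sketched as a small controller that lowers the amount of AR detail when a stress estimate is high and restores it when the user relaxes. Everything here is hypothetical: the normalized stress score is assumed to come from some upstream EEG or fNIRS pipeline, and the thresholds are placeholders.

```python
def choose_detail_level(stress, current_level, high=0.7, low=0.4):
    """Return the next detail level: 0 (minimal), 1 (reduced), 2 (full).

    `stress` is an assumed normalized score in [0, 1]. The gap between
    `low` and `high` acts as hysteresis so the display does not flicker
    between levels when the score hovers near a single threshold.
    """
    if stress >= high and current_level > 0:
        return current_level - 1
    if stress <= low and current_level < 2:
        return current_level + 1
    return current_level


level = 2
history = []
for stress in [0.8, 0.9, 0.5, 0.3, 0.2]:
    level = choose_detail_level(stress, level)
    history.append(level)
print(history)  # [1, 0, 0, 1, 2]
```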

Under-the-Hood: Advanced AR Technologies

Photorealistic Rendering

- Diffusion Models: Text-to-3D asset generation (e.g., OpenAI’s Point-E) for instant AR content creation.

Haptic Feedback Integration

- Ultrasonic waves (e.g., Ultrahaptics) or electro-tactile gloves to simulate touch in AR.

- Applications: “Feeling” virtual buttons or textures in e-commerce.

Semantic Understanding

- Scene ML (Meta) and Apple’s RoomPlan (introduced alongside ARKit 6) can classify objects semantically (e.g., “this is a sofa, not a table”).

- Enables context-aware AR: A virtual cat jumping onto your real couch, not the floor.
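Given semantic labels, context-aware placement reduces to choosing a surface by meaning rather than by geometry alone. The sketch below assumes a scene-understanding system has already produced labeled surfaces; the `Surface` class, the labels, and the minimum-area rule are illustrative and do not reflect any specific SDK's data model.

```python
from dataclasses import dataclass


@dataclass
class Surface:
    label: str       # semantic class assumed to come from scene understanding
    height_m: float  # height above the floor
    area_m2: float   # usable area


def pick_surface(surfaces, preferred_labels, min_area_m2=0.2):
    """Return the largest surface matching the first satisfiable label."""
    for label in preferred_labels:
        candidates = [s for s in surfaces
                      if s.label == label and s.area_m2 >= min_area_m2]
        if candidates:
            return max(candidates, key=lambda s: s.area_m2)
    return None


room = [
    Surface("floor", 0.0, 12.0),
    Surface("table", 0.75, 1.2),
    Surface("sofa", 0.45, 1.6),
]

# The virtual cat prefers the sofa and falls back to the floor.
target = pick_surface(room, preferred_labels=["sofa", "floor"])
print(target.label)  # sofa
```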

AR in Frontier Industries

Space Exploration

- NASA’s Sidekick project uses HoloLens to guide astronauts on ISS repairs.

- Future Mars missions may deploy AR for navigation in GPS-denied environments.

Quantum AR

- Experimental use of quantum sensors for ultra-precise positioning beyond GPS limitations.

Bio-Integrated AR

- Smart contact lenses (e.g., Mojo Vision) with built-in displays—AR without glasses.

- Neural interfaces: Elon Musk’s Neuralink could someday project AR directly into the visual cortex.

Ethical & Societal Dilemmas

Reality Ownership

- Who controls AR layers in public spaces? (E.g., AR graffiti vs. city regulations.)

Deepfake AR

- Malicious use of real-time face swaps in AR video calls or impersonations.

Digital Inequality

- “AR Divide” between those with advanced wearables and those reliant on smartphones.

Psychological Effects

- “AR Addiction” or dissociation from reality, akin to social media’s impact.

Unconventional AR Applications

AR for Animals

- Guide dogs with AR visors highlighting obstacles.

- Livestock management: AR overlays showing health stats on cows.

Climate AR

- Visualizing carbon footprints in real time (e.g., AR glasses showing emissions from cars).

Time-Shifted AR

- Overlaying historical scenes onto modern locations (e.g., seeing ancient Rome in today’s streets).